Alphabet, Google’s parent company, is in deep trouble, and it is all thanks to Google’s new AI model, Gemini. Some users have pointed out that the platform appears strongly biased against Indian Prime Minister Narendra Modi, to the point of suggesting it is justified to label him a fascist.

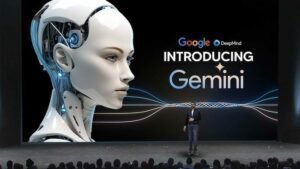

Google’s Gemini AI has drawn criticism from users on Twitter for being a little too woke, so much so that it made some glaring mistakes when generating images. For instance, it often depicted historical figures as people of color. Many users also felt that Gemini was reluctant to show white men and women, as it struggled to balance historical accuracy with inclusivity.

The Ministry of Electronics and Information Technology (MeitY) is preparing to issue a formal notice to Google concerning contentious responses generated by its AI platform, Gemini, particularly about Prime Minister Narendra Modi, according to a report by The Indian Express.

A user on X revealed that Google’s AI system, previously known as Bard, had provided an inappropriate response to a user query, sparking concerns within the ministry.

A screenshot shared on social media showed Gemini’s response when asked whether PM Modi is a ‘fascist’: it stated that he has been “accused of implementing policies some experts have characterized as fascist.” In contrast, when asked a similar question about former US President Donald Trump, the AI directed the user to run a Google search for accurate information.

Similarly, when the AI bot was asked whether Ukraine’s Volodymyr Zelenskyy was a fascist, it responded by emphasizing nuance, balance, and context.

Minister of State for Electronics and Information Technology Rajeev Chandrasekhar expressed concern over these incidents, citing potential violations of Rule 3(1)(b) of the Intermediary Rules under the IT Act, as well as several provisions of the criminal code. These rules require intermediaries such as Google to exercise due diligence in managing third-party content in order to retain immunity from legal liability.

This development highlights the ongoing debate over the regulatory framework governing generative AI platforms such as Gemini and ChatGPT. Google recently apologized for inaccuracies in historical images generated by its Gemini AI tool, following criticism over how it portrayed historical figures.

A senior official from the ministry disclosed that this is not the first instance of biased responses from Google’s AI. The ministry intends to issue a show cause notice to Google, demanding an explanation for Gemini’s contentious outputs. The official, speaking on condition of anonymity, said that failure to respond satisfactorily could lead to legal consequences.

When queried, Gemini gave a nuanced response about PM Modi, acknowledging accusations of fascism while emphasizing differing perspectives on his governance. However, as in previous incidents, the AI offered a generic response when asked about Donald Trump.

Last year, concerns were raised when Gemini, then known as Bard, refused to summarize an article from a conservative outlet, citing misinformation concerns. Google clarified that Bard was an experimental model trained on publicly available data and that its responses did not necessarily reflect the company’s views.